Ethical Concerns of Artificial Intelligence in Healthcare: What You Need to Know


Artificial Intelligence (AI) is transforming the healthcare landscape. From enhancing diagnostics to optimizing treatment plans, AI promises to revolutionize patient care. However, with these advancements come ethical challenges that demand attention and action.

In this post, we’ll explore the major ethical concerns of AI in healthcare and discuss how we can address them responsibly.

1. Data Privacy and Security

AI systems depend on large volumes of data. In healthcare, that means handling sensitive patient information such as medical records, genetic data, and real-time monitoring data.

The Concern:

How do we ensure that patient data remains secure and private? A data breach could have severe consequences, including identity theft, stigmatization, or misuse by third parties.

Solutions:

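- Encrypt patient data at rest and in transit, and anonymize or pseudonymize records before they are used to train AI systems.

- Comply with regulations such as HIPAA in the United States and GDPR in the European Union.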

- Educate healthcare providers on secure data practices.
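To make the anonymization point more concrete, here is a minimal sketch of pseudonymization in Python. It assumes a record is a plain dictionary; the field names, the `DIRECT_IDENTIFIERS` set, and the `pseudonymize` function are all hypothetical and chosen only for illustration. Note that this is pseudonymization, not full anonymization: fields left in the record (such as a rare diagnosis) can still help re-identify someone, and encryption of the stored data would be handled separately.

```python
import hashlib
import hmac

# Hypothetical field names; a real patient record schema will differ.
DIRECT_IDENTIFIERS = {"name", "address", "phone"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record without direct identifiers, with the
    patient ID replaced by a keyed hash (HMAC-SHA256)."""
    token = hmac.new(
        secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["patient_token"] = token
    return cleaned


# Made-up example record, not real patient data.
record = {
    "patient_id": "MRN-001",
    "name": "Jane Doe",
    "address": "1 Example Street",
    "phone": "555-0100",
    "diagnosis": "type 2 diabetes",
}
safe = pseudonymize(record, secret_key=b"replace-with-a-managed-secret")
print(sorted(safe))
```

Using a keyed hash rather than a plain hash means someone without the secret key cannot rebuild the token from a known patient ID, while the same patient still maps to the same token across datasets.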

The Debate:

Should patients have the right to opt out of data sharing for AI purposes? While some advocate for patient autonomy, others highlight the potential loss of valuable insights for medical research.

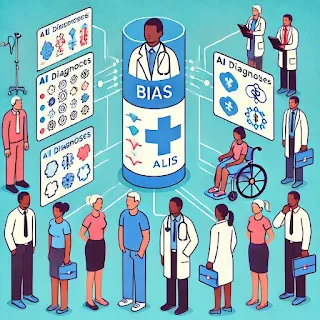

2. Bias in AI Algorithms

AI systems learn from historical data, but this data often reflects societal biases.

The Concern:

Bias in training data can lead to unequal treatment or misdiagnosis, especially for underrepresented groups. For example, an AI trained mostly on data from white patients might be less accurate for patients from other racial and ethnic groups.

Solutions:

- Use diverse and inclusive datasets.

- Regularly audit algorithms for biases.

- Involve diverse teams in AI development to avoid blind spots.
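One simple form a bias audit can take is comparing a model's sensitivity (the share of true cases it catches) across patient groups. The sketch below assumes predictions are available as `(group, true_label, predicted_label)` tuples; the function name, the group labels, the sample data, and the `MAX_GAP` tolerance are all hypothetical. A real audit would look at more metrics and far more data.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, true_label, predicted_label) with 0/1 labels.
    Returns {group: true positive rate} for groups with at least one positive case."""
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}


# Made-up illustrative data, not results from any real system.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 0),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())

MAX_GAP = 0.1  # hypothetical tolerance; the right value is a policy decision
needs_review = gap > MAX_GAP
print(rates, needs_review)
```

In this toy data the model catches three of four true cases in one group but only one of four in the other, so the audit flags it for review.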

Real-World Example:

Studies of AI systems for skin cancer detection have reported lower accuracy on darker skin tones, largely because the training images came mostly from lighter-skinned patients.

Image: Protecting Patient Data: Ensuring Privacy and Security in AI-Powered Healthcare

3. Transparency and Explainability

Many AI systems operate as "black boxes," making decisions without clear explanations.

The Concern:

If healthcare professionals and patients don’t understand how an AI reached a decision, trust diminishes. Moreover, accountability becomes murky when mistakes happen.

Solutions:

- Develop Explainable AI (XAI) systems that provide insights into decision-making processes.

- Establish clear accountability frameworks to determine who is responsible for errors.
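As a rough illustration of what an explainable output might look like, the sketch below uses a toy linear risk score and reports how much each input contributed to it. The feature names and weights are invented for this example and have no clinical meaning, and `explain_linear_score` is a hypothetical helper. Complex models need dedicated explanation methods, but the goal is the same: show which inputs drove the result.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score of a linear model plus each feature's contribution,
    ranked from largest to smallest absolute effect."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Invented weights and inputs for illustration only; not a clinical model.
weights = {"age_decades": 0.5, "systolic_bp_over_120": 0.25, "smoker": 1.0}
patient = {"age_decades": 6, "systolic_bp_over_120": 2, "smoker": 1}

score, ranked = explain_linear_score(weights, patient)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

A clinician reading this output can see not just the score but that age contributed most to it, which gives them something specific to question or confirm.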

4. Informed Consent

Patients have the right to know how their data is being used and whether AI is part of their care.

The Concern:

Informed consent is harder to obtain meaningfully when AI systems operate behind the scenes, because patients might not fully understand the role AI plays in their diagnosis or treatment.

Solutions:

- Clearly communicate AI’s involvement in patient care.

- Update consent forms to include AI usage in plain language.

5. Job Displacement

AI can automate many tasks traditionally performed by healthcare workers.

The Concern:

Will AI lead to widespread job losses in healthcare? Administrative roles and even some clinical tasks, like image analysis, are at risk of automation.

Solutions:

- Use AI to augment, not replace, human roles.

- Invest in reskilling programs for affected workers.

The Debate:

Can we achieve a balance where AI improves efficiency without harming employment? Collaborative efforts between policymakers and healthcare organizations are essential.

6. Access and Equity

Advanced AI tools are often expensive, creating disparities in access.

The Concern:

Wealthier institutions may adopt cutting-edge AI, while underfunded hospitals struggle to keep up. This could exacerbate existing healthcare inequalities.

Solutions:

- Promote open-source AI solutions.

- Provide subsidies or grants for low-income healthcare facilities.

7. Over-reliance on AI

AI is powerful but not infallible.

The Concern:

Blindly trusting AI recommendations can lead to errors if the system fails or produces incorrect results. This over-reliance can erode critical thinking among healthcare professionals.

Solutions:

- Train professionals to critically evaluate AI outputs.

- Establish protocols for verifying AI-generated recommendations.
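A verification protocol can be as simple as a rule that decides how much human scrutiny each AI recommendation gets. The sketch below assumes the AI system reports a confidence value between 0 and 1; the `Recommendation` class, the `route` function, the route names, and the `REVIEW_THRESHOLD` value are all hypothetical. Note that no path skips the clinician entirely.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.9  # hypothetical cutoff; a real one would be set and validated locally


@dataclass
class Recommendation:
    patient_token: str
    suggestion: str
    confidence: float  # model-reported confidence, 0.0 to 1.0
    high_risk: bool    # e.g. the suggestion changes medication or involves surgery


def route(rec: Recommendation) -> str:
    """Decide how much human scrutiny a recommendation receives."""
    if rec.high_risk or rec.confidence < REVIEW_THRESHOLD:
        return "full_clinician_review"
    return "clinician_sign_off"


# Made-up examples for illustration.
examples = [
    Recommendation("token-1", "routine follow-up in 6 months", 0.97, False),
    Recommendation("token-2", "routine follow-up in 6 months", 0.62, False),
    Recommendation("token-3", "start anticoagulant therapy", 0.99, True),
]
for rec in examples:
    print(rec.patient_token, route(rec))
```

High-risk suggestions go to full review even when the model is very confident, because confidence alone does not tell us whether the model is right.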

8. Ethical Research Practices

AI accelerates research but introduces new ethical dilemmas.

The Concern:

How do we ensure that AI-driven research respects patient rights? For example, using genetic data without proper consent could lead to ethical violations.

Solutions:

- Enforce strict ethical guidelines for AI research.

- Conduct regular reviews by independent ethics boards.

The Debate:

Should private companies profit from AI insights derived from public health data? Some argue profits should be reinvested into public health systems.

Image: Addressing Bias in Healthcare AI: Striving for Fair and Equitable Outcomes

Conclusion: Navigating the Ethical Landscape

AI has the potential to transform healthcare for the better, but only if we address its ethical challenges. Transparency, fairness, and collaboration will be key to ensuring that AI serves everyone equitably and responsibly.

What are your thoughts on AI ethics in healthcare? Join the conversation and share your perspective!
